AI Deployment Challenges in Healthcare: Key Barriers and Solutions

09 SEPTEMBER

Healthcare is one of the industry sectors being most rapidly reshaped by advances in AI. AI has demonstrated value for years in health applications such as diagnostic imaging, patient triage, and robotic surgery.

The global AI in healthcare market is expected to reach US$187 billion by 2030, according to Statista, a clear indication of how heavily healthcare organisations are investing in AI systems.

In theory, AI can do a great deal. It can identify tumours faster than radiologists, predict patient deterioration before it occurs, and recommend treatment options based on thousands of cases within seconds. The appeal is real, especially within a healthcare sector plagued by staff shortages, rising costs, and the burden of chronic disease management.

And yet, AI deployment challenges in healthcare continue to stall real progress.

Why Healthcare Is Making Such Large AI Investments

There is pressure on hospitals, clinics, and healthcare systems across the globe to do more with less. AI offers an enticing promise:

- Faster, more accurate diagnoses.

- Predictive insights for early intervention.

- Remote patient monitoring and automation of repetitive tasks.

- Optimised resource allocation in hospitals.

These uses are no longer just theoretical possibilities; they are being piloted and implemented. Take, for instance, AI-enabled platforms that help oncologists find patterns in imaging scans that trained eyes might miss.

There are also predictive readmission models, which warn hospitals about which patients may be at risk before they are discharged.

The surge in telemedicine and digital health platforms post-COVID-19 has only accelerated interest in intelligent systems. AI is no longer seen as experimental; it’s becoming essential. But enthusiasm doesn’t always translate to execution.

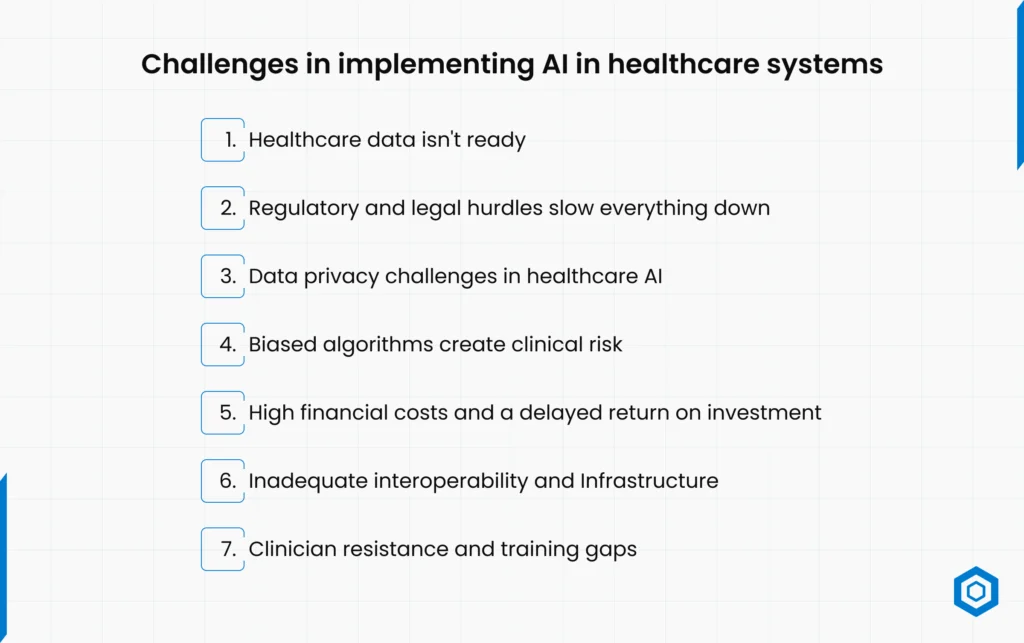

Challenges in implementing AI in healthcare systems

Despite growing demand for intelligent systems, deploying AI in healthcare is anything but straightforward. From data issues to clinician pushback, here are the seven biggest AI deployment challenges in healthcare that continue to delay widespread adoption.

- Healthcare Data Isn’t Ready

Many hospitals still use legacy systems and have disorganised patient records. Medical data is stored in various formats, including structured, semi-structured, and unstructured, and on separate platforms. These conditions make it very hard to extract data and train models.

Even when valuable data for AI is available, it is often tainted by inconsistent labelling, duplicate entries, and outdated storage formats. Most organisations also lack the policies and infrastructure to generate high-quality data and share it in a way that allows real-time access and use.
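
To make the duplicate-entry and inconsistent-format problem concrete, here is a minimal Python sketch of normalising and de-duplicating records. The field names, date formats, and sample records are all assumptions made up for illustration, and exact-key matching is a simplification: real record linkage usually needs fuzzy or probabilistic matching.

```python
from datetime import datetime

# Hypothetical date formats found across legacy systems (an assumption).
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y%m%d")


def normalise_date(raw: str) -> str:
    """Return an ISO 8601 date, trying each known legacy format in turn."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognised date format: {raw!r}")


def normalise_record(record: dict) -> dict:
    """Standardise the fields used for matching (illustrative field names)."""
    return {
        "name": " ".join(record["name"].lower().split()),
        "dob": normalise_date(record["dob"]),
        "diagnosis": record["diagnosis"].strip().upper(),
    }


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first record seen for each (name, dob, diagnosis) key."""
    seen = set()
    unique = []
    for record in map(normalise_record, records):
        key = (record["name"], record["dob"], record["diagnosis"])
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Made-up sample data: the first two rows describe the same patient.
records = [
    {"name": "Jane  Doe", "dob": "1980-04-12", "diagnosis": "e11.9"},
    {"name": "jane doe", "dob": "12/04/1980", "diagnosis": "E11.9 "},
    {"name": "John Roe", "dob": "19750203", "diagnosis": "I10"},
]
clean = deduplicate(records)
```

Here `clean` ends up with two records instead of three, because the two Jane Doe rows collapse into one once names, dates, and codes are written the same way.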

- Regulatory and Legal Hurdles Slow Everything Down

Compliance is a moving target in Healthcare. Every AI model needs to adhere to complex rules: HIPAA in the U.S., GDPR in Europe, and local regulatory frameworks globally.

Yet AI algorithms are often opaque, which creates real regulatory compliance issues. Healthcare organisations worry about liability, explainability requirements, and the auditability of AI decisions when a patient is harmed.

The result? Most healthcare institutions hesitate to move forward until regulations become clearer or more AI-specific.

- Data privacy challenges in healthcare AI

AI depends on data, and healthcare is highly regulated when it comes to privacy. Balancing the need for rich datasets against the responsibility to protect patient privacy remains a dilemma for hospitals.

Sharing even anonymised data between partners or departments raises red flags, all the more so where cross-border data transfers are restricted. A misconfiguration, unauthorised access, or a breach of personal information can carry substantial commercial and reputational risk.

This remains one of the most significant obstacles to scaling AI in healthcare organisations.

- Biased Algorithms create Clinical Risk

Bias isn’t just a fairness issue; it can lead to misdiagnosis or ineffective treatment. AI systems trained on incomplete or non-representative data can perform poorly for specific populations, including minorities, older adults, and individuals with rare diseases.

These models often lack clinical context, making them unreliable in real-world environments. Regulators and practitioners are rightfully cautious: if an AI system makes a flawed decision, who takes the blame?

Hospitals are increasingly concerned with how to avoid algorithmic bias and still benefit from AI.

- High financial costs and a delayed return on investment

Deploying AI in Healthcare isn’t just about the technology; it’s about transforming infrastructure, retraining staff, and maintaining systems long term. The cost of all of this is high.

Organisations are understandably reluctant to take the plunge when they can’t prove a direct return on investment. Executives are also apprehensive about the unknown costs associated with vendor lock-in, upgrades, or integration with the existing systems on which they have built their business.

Financial barriers to AI implementation are, therefore, one of the most underestimated but powerful reasons we are still waiting for true AI disruption in healthcare.

- Inadequate Interoperability and Infrastructure

Healthcare IT systems were never designed with AI in mind. Integrating intelligent algorithms into environments where systems don’t communicate with each other is a technical nightmare.

Imaging devices, electronic health record (EHR) systems, and even diagnostics lack standardised data formats, which creates significant inefficiency across the entire healthcare system.

Furthermore, many providers lack the infrastructure, including GPUs and data lakes, needed to support machine learning applications at the required scale.

- Clinician Resistance and Training Gaps

Numerous clinicians remain sceptical about the reliability of AI, fearing potential replacement or diminished decision-making authority.

In addition, there is a significant training gap: healthcare professionals are frequently insufficiently prepared to interpret or use the outputs of AI, or to take advantage of new tools as part of their workflow.

Until organisations focus on education, usability, and change management, healthcare will face ongoing AI training challenges, no matter how sophisticated the technology becomes.

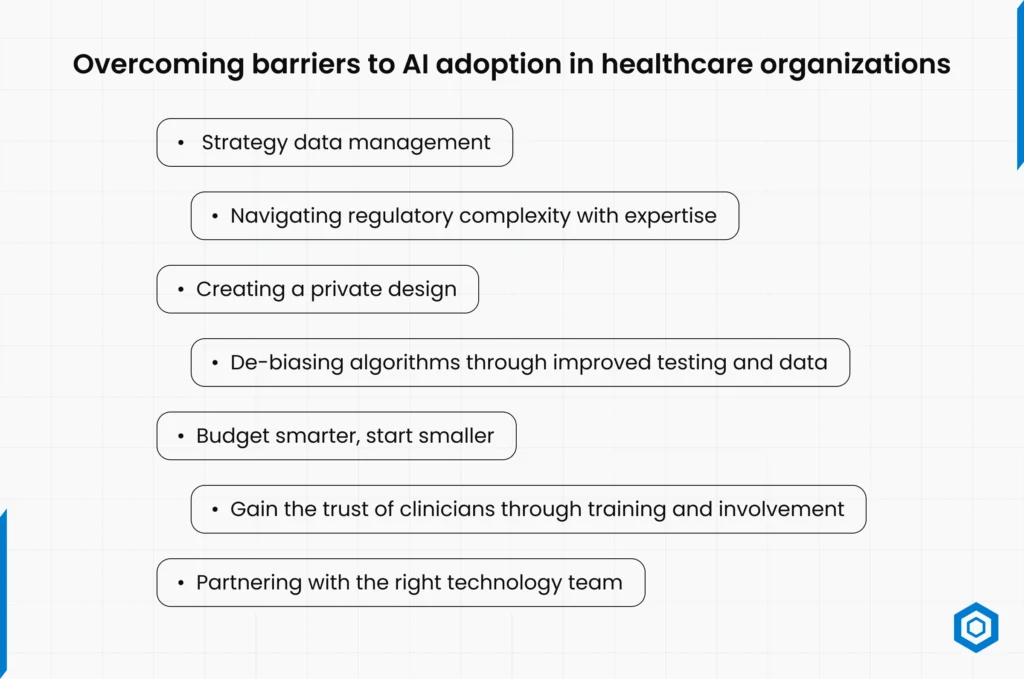

Overcoming Barriers to AI adoption in healthcare organizations

The AI deployment challenges in healthcare are complex, but they’re not insurmountable. With the right approach, tools, and partners, organisations can begin turning experimentation into real, scalable impact.

Let’s look at how these barriers to AI in healthcare can be addressed strategically.

- Strategic Data Management

Every data quality improvement starts with good data governance, and it takes time. Hospitals need centralised data policies, better documentation, and funding for data engineering.

Structured data formats and interoperable systems can create AI-friendly environments.

Example: Migrating from paper-based records to cloud-based EHRs with real-time sync capabilities significantly improves the value of training data.

- Navigating Regulatory Complexity with Expertise

Instead of waiting for regulations to become clearer, healthcare organisations should collaborate with legal and compliance professionals who understand the existing regulatory environment.

In addition, AI models can be designed to be transparent and auditable, which makes future regulatory compliance less daunting.

Before expanding, organisations can also test models in supervised, controlled settings to demonstrate their efficacy and safety.
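
One way to picture what an auditable model might involve is a tamper-evident log of its predictions. The Python sketch below is only an illustration under stated assumptions: the function names, log fields, and model version string are hypothetical, and no regulation prescribes this particular design. Note also that hashing inputs avoids copying raw patient data into the log, but it is not a substitute for proper anonymisation.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(payload: dict) -> str:
    """SHA-256 of a dict serialised with sorted keys for stable hashing."""
    return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()


def audit_entry(model_version: str, features: dict, prediction: float,
                prev_hash: str = "") -> dict:
    """Build one log entry that is chained to the previous entry's hash."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        # Store a hash of the inputs rather than the raw patient data.
        "input_hash": _digest(features),
        "prediction": prediction,
        "prev_hash": prev_hash,
    }
    entry["entry_hash"] = _digest(entry)
    return entry


def verify_chain(entries: list[dict]) -> bool:
    """Return True only if no entry was altered, removed, or reordered."""
    prev = ""
    for entry in entries:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if entry["prev_hash"] != prev or entry["entry_hash"] != _digest(body):
            return False
        prev = entry["entry_hash"]
    return True


# Made-up example: two predictions from a hypothetical model "v1.0".
first = audit_entry("v1.0", {"age": 70, "hr": 110}, 0.82)
second = audit_entry("v1.0", {"age": 55, "hr": 72}, 0.12,
                     prev_hash=first["entry_hash"])
log = [first, second]
```

Calling `verify_chain(log)` lets a reviewer check later that the recorded predictions still match what was logged at the time, which is the kind of evidence an audit would typically ask for.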

- Designing for Privacy

Privacy doesn’t have to conflict with AI development. Techniques such as differential privacy, federated learning, and data anonymisation can be used to train models without exposing sensitive data.

Explicit consent flows for patients (for example: “this is how we will use your data”) and AI governance frameworks can reassure patients and regulators, respectively.
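
To give a feel for differential privacy, the simplest textbook illustration is a noisy count rather than full model training, which in practice relies on specialised libraries. The Python sketch below applies the Laplace mechanism to a counting query; the function names and the sample data are assumptions for illustration, and a production system would also need to handle floating-point subtleties and track the total privacy budget.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values: list[bool], epsilon: float, rng: random.Random) -> float:
    """Return a count with Laplace noise calibrated to epsilon.

    A counting query has sensitivity 1 (adding or removing one patient
    changes the count by at most 1), so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return sum(values) + laplace_noise(1 / epsilon, rng)


# Made-up data: 40 of 100 patients have the condition of interest.
has_condition = [True] * 40 + [False] * 60
rng = random.Random(0)  # seeded only to make the sketch repeatable
noisy = private_count(has_condition, epsilon=0.5, rng=rng)
```

A smaller `epsilon` adds more noise and gives stronger privacy; a larger one keeps the released figure closer to the true count of 40.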

- De-biasing Algorithms through Improved Testing and Data

Fighting bias begins with representative data. Healthcare organisations need to be deliberate about acquiring diverse and inclusive training data.

To help detect and address potential biases, algorithms should also go through continuous validation against actual clinical data. Some leading hospitals are putting together AI ethics boards to monitor fairness and accountability in algorithmic decision-making.
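
As a rough illustration of what validating a model across patient groups can look like, the Python sketch below compares sensitivity (the true-positive rate) per group and flags groups that lag behind. The group names, the 0.1 threshold, and the data are all made up for this example; a real validation would use larger samples, more metrics, and clinically meaningful subgroups.

```python
from collections import defaultdict


def sensitivity_by_group(rows: list[dict]) -> dict[str, float]:
    """Return the true-positive rate per group.

    Each row needs 'group', 'label' and 'prediction' keys (illustrative
    names), where label and prediction are 0 or 1.
    """
    hits = defaultdict(int)
    positives = defaultdict(int)
    for row in rows:
        if row["label"] == 1:
            positives[row["group"]] += 1
            hits[row["group"]] += row["prediction"]
    return {group: hits[group] / positives[group] for group in positives}


def flag_gaps(rates: dict[str, float], max_gap: float = 0.1) -> list[str]:
    """List groups whose sensitivity trails the best group by more than max_gap."""
    best = max(rates.values())
    return sorted(g for g, r in rates.items() if best - r > max_gap)


# Synthetic rows: the model misses far more true cases in the older group.
rows = (
    [{"group": "under_65", "label": 1, "prediction": 1}] * 3
    + [{"group": "under_65", "label": 1, "prediction": 0}]
    + [{"group": "under_65", "label": 0, "prediction": 0}]
    + [{"group": "65_plus", "label": 1, "prediction": 1}]
    + [{"group": "65_plus", "label": 1, "prediction": 0}] * 3
    + [{"group": "65_plus", "label": 0, "prediction": 1}]
)
rates = sensitivity_by_group(rows)
flagged = flag_gaps(rates)
```

On this synthetic data the model catches three of four true cases in the younger group but only one of four in the older group, so `flagged` contains `"65_plus"`. Running a check like this on every model update is one way to make the validation continuous.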

- Budget Smarter, Start Smaller

When weighing the expense of AI, focus first on use cases that can yield a clear ROI. For example, consider whether automating billing analytics or appointment booking would be cheaper than adopting a diagnostic AI platform.

By starting small, teams can learn, iterate, and show business value before scaling up. Collaborations with AI companies that specialise in healthcare also lessen the need for internal tech teams.

- Gain the Trust of Clinicians Through Training and Involvement

AI should augment human clinicians, not replace them. Including clinicians in the development and testing of AI tools helps ensure the tools are useful and relevant.

It is also essential to invest in staff training and change management, and in designing simple interfaces.

Trust is built when clinicians understand how a model functions, why it is making recommendations, and what the process entails if the clinician disagrees with the model.

- Partnering with the Right Technology Team

Implementing AI solutions in healthcare takes more than technical know-how; it requires a deep understanding of compliance, clinical workflows, and data protection.

A trusted technology partner like Codeflash Infotech can help you navigate regulatory barriers, develop interoperable systems, and ensure responsible data governance. Their ultimate aim is to make deploying your AI to production easier and faster without compromising safety.

They develop auditable, secure systems that can be deployed in real-world clinical settings. With their support, healthcare organisations can move from pilot to production with greater confidence and control.

Conclusion

AI will never replace doctors, but doctors who use AI may replace those who don’t. That is the future we are hurtling toward. But the road is not just paved with innovation. From data to regulation to trust, the challenges of AI adoption in healthcare demand thoughtful and strategic navigation.

Yet the first question isn’t “What can we build?” but “Are we ready to build it the right way?”

By tackling foundational healthcare AI implementation challenges from the start, institutions can scale up safely, sustainably, and quickly, going from a successful pilot to larger-scale solutions. Healthcare needs to adapt to AI, even though that is easier said than done.

Frequently Asked Questions

Healthcare data quality challenges, confusing regulations, cost, inadequate AI infrastructure, biased algorithms, and staff resistance are among the most significant hurdles. They represent the primary barriers to successful AI adoption in healthcare.

Privacy laws like HIPAA and GDPR restrict how patient data can be stored, shared, and used, especially for training AI systems. Healthcare organisations must create mechanisms that protect privacy first and make sure that everyone follows the rules at every step of the process.

The absence of clean, standardised, and interoperable data remains the single most significant limitation. If the data itself is not reliable, no AI tool, no matter how sophisticated, will produce consistent, safe, or reliable findings.